From Infrastructure Scaling to Team Empowerment: Your Cloud & SRE Transformation Framework

- John Adams

- Aug 22, 2025

The Inevitable Crossroads: Why Technical Expertise Alone Isn't Enough for Successful Cloud/SRE Scaling

Ah, the cloud. It arrived like that great British tea party – initially met with skepticism, then eagerly embraced as the elixir of digital transformation. And rightly so; it offers scalability and resilience unlike anything before. But let's be honest: scaling infrastructure in a multi-cloud world isn't just about throwing more servers at the problem (unless you're debugging an exceptionally stubborn application). It’s like trying to unclog a system with too many moving parts – complex, fragile, and prone to unexpected leaks.

Many organizations rush into Kubernetes clusters or serverless functions without considering the human element. They focus on tools: Terraform for provisioning, Ansible for configuration management, maybe some fancy cloud-native monitoring tool. But if you don't have people who understand these technologies and are empowered to use them effectively, it's just expensive fluff. Remember that old joke about building a better mousetrap? Everyone wants one, but nobody knows how to build it without stepping on the cat first.

This is where Site Reliability Engineering (SRE) comes in – not as another pointy-haired manager position, but as a philosophy demanding both technical depth and mature operational leadership. SRE requires the right skill sets: developers who understand infrastructure needs, operators comfortable with automation, and data-savvy engineers to navigate observability mountains. But without fostering an environment where collaboration flows freely between development and operations teams, you're just building castles in the air.

The true crossroads lies in balancing technical rigor with people-centric leadership. This isn't about choosing one over the other; it's about finding that sweet spot where processes empower individuals to deliver reliable services at scale. We need a framework – something that brings clarity amidst chaos and helps teams navigate their way through this transformation journey effectively.

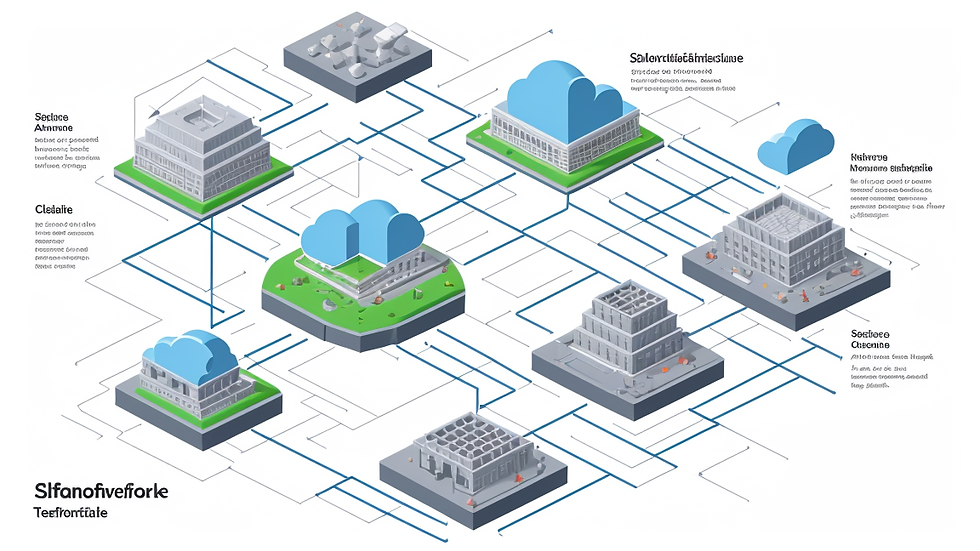

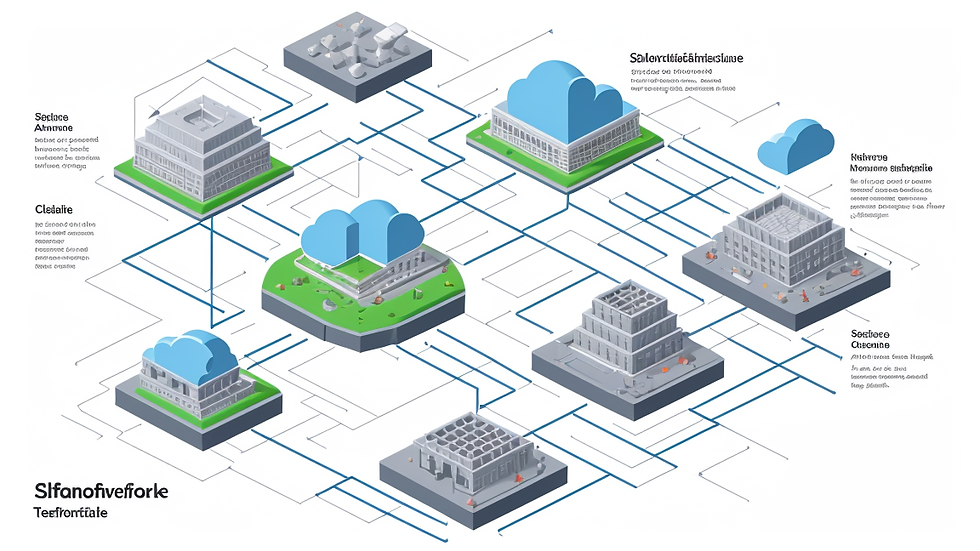

Introducing the Framework: Bending the Curve of Complexity with Strategic Leadership

So, let me introduce you to what I call the "Three Pillars" approach for bridging infrastructure scaling and team empowerment. Think of it less as a rigid set of rules and more as a dynamic architecture – like those cool network diagrams we used to draw back in the day. The goal here isn't just technical proficiency but creating an environment where teams can thrive.

This framework is built on three fundamental principles, designed to reinforce one another:

Technical Foundations: This deals with setting up robust automation and observability systems so that reliability becomes inherent in how services are built and deployed.

Team Dynamics & Operational Health: This covers how we structure work, empower people, manage risk, and foster a culture where everyone shares responsibility for service reliability. It isn't just about fighting fires – it's about making them less frequent and more manageable.

AI Integration as Accelerator: Leveraging artificial intelligence to enhance operational efficiency beyond traditional automation, helping teams focus on complex issues while machines handle the simple ones.

The beauty of this framework lies in its flexibility. We can apply these principles incrementally across different domains (networking, storage, application deployment) or scale them up for entire organizational transformations. It's about bending that ever-upward curve of complexity through smart technical choices and effective leadership strategies.

Pillar One: Technical Excellence - Building Robust CI/CD, Observability, and Networking Foundations

This first pillar is where we tackle the plumbing – the underlying systems that must work flawlessly for everything else to function properly. We need automated processes that handle change reliably and continuously monitor our complex distributed systems. Forget manual interventions; they're inefficient and error-prone.

Automated Infrastructure Delivery: Beyond Basic Provisioning

Let's face it, writing YAML files or using `terraform apply` is just the beginning of managing infrastructure at scale. What we truly need are:

Robust CI/CD Pipelines: These aren't just for code deployment. They must include automated testing (unit, integration, chaos), security scanning, and infrastructure validation across your chosen cloud platforms (be it AWS, Azure, GCP). Think of it as building a continuous delivery pipeline that doesn't just deploy the app – it deploys the whole system. This requires mature tooling but also clear processes defining what gets deployed, when, and how.

Immutable Infrastructure Principles: Treat servers or containers like throwaway objects. Provision them consistently from templates (like Docker images) and replace them entirely upon updates rather than patching existing ones. It prevents state drift – that sneaky culprit more dangerous than a misplaced comma in configuration code.

Proper networking is non-negotiable too. We're building complex systems, not just deploying boxes into an empty room:

Well-Designed VPC/Cloud-Native Network Topologies: This isn't trivial. You need to consider segmentation (public vs private), routing suited to the environment, and efficient load balancing strategies (especially at scale). Think about using service meshes like Istio or Linkerd – they handle the reliability and security of internal communication far more consistently than a hand-rolled setup.

Automated Security Controls: Security groups, network ACLs, and firewall rules need to be versioned and managed alongside code. Tools that integrate with your CI/CD pipeline can automatically update these rules when infrastructure changes occur.

The key here is consistency. We must have repeatable processes for provisioning, deploying, testing, and managing infrastructure components so they behave predictably – regardless of who triggers the change or where it happens in our distributed cloud landscape.
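As a rough illustration of treating security rules as versioned, testable code, the sketch below shows the kind of check a CI pipeline could run before firewall changes are applied. The `IngressRule` shape, the `SENSITIVE_PORTS` policy, and the rule names are all assumptions made up for this example; real cloud security groups carry more fields and would be loaded from your IaC definitions.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressRule:
    """Hypothetical, simplified firewall rule (illustrative fields only)."""
    name: str
    cidr: str
    port: int


# Assumed policy: these ports must never be reachable from every address.
SENSITIVE_PORTS = {22, 3389}


def find_violations(rules):
    """Return names of rules that expose a sensitive port to any address."""
    violations = []
    for rule in rules:
        network = ipaddress.ip_network(rule.cidr)
        open_to_world = network.prefixlen == 0  # matches 0.0.0.0/0 and ::/0
        if open_to_world and rule.port in SENSITIVE_PORTS:
            violations.append(rule.name)
    return violations


rules = [
    IngressRule("web-https", "0.0.0.0/0", 443),
    IngressRule("admin-ssh", "0.0.0.0/0", 22),
    IngressRule("office-ssh", "203.0.113.0/24", 22),
]
print(find_violations(rules))  # ['admin-ssh']
```

A pipeline step could fail the build whenever the returned list is non-empty, so a risky rule never reaches production unreviewed.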

Pillar Two: Team Dynamics & Operational Health - Fostering a High-Performance SRE Culture

Okay, let's talk people. This pillar is crucial because no matter how robust your technical foundation becomes, if you don't have teams ready to leverage and maintain it effectively, the whole system collapses like an improperly bolted server rack.

Breaking Down Silos: The DevOps/SRE Mindset Shift

We're not just talking about hiring more SREs. It's about changing the culture – moving from finger-pointing when things go wrong (ops blames dev for bad code, dev blames ops for slow deployments) to collaborative ownership where everyone shares responsibility.

This involves:

Defining Clear Service Level Objectives (SLOs): Forget unscoped targets like "99.9% uptime" that never say what counts as "up" or over which window it is measured. SLOs need to be specific, measurable, and understood by everyone on the team – from developers writing code to product managers defining requirements. They provide a common language for discussing reliability.

Implementing Blameless Postmortems: This is foundational! We need processes where teams analyze failures without assigning blame, focusing instead on understanding root causes and implementing preventive measures. It builds trust rather than defensiveness over time.
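To make the SLO discussion concrete, here is a small worked calculation of an error budget: the amount of unavailability an availability target permits over a given window. The function names and the 30-day window are assumptions chosen for illustration, not a prescribed standard.

```python
def error_budget_minutes(slo_target: float, window_days: int) -> float:
    """Minutes of allowed unavailability for an availability SLO over a window."""
    if not 0 < slo_target < 1:
        raise ValueError("slo_target must be a fraction between 0 and 1")
    total_minutes = window_days * 24 * 60
    return total_minutes * (1 - slo_target)


def budget_remaining(slo_target: float, window_days: int, downtime_minutes: float) -> float:
    """Fraction of the error budget still unspent (negative means overspent)."""
    budget = error_budget_minutes(slo_target, window_days)
    return (budget - downtime_minutes) / budget


# A 99.9% target over 30 days allows roughly 43 minutes of downtime.
print(round(error_budget_minutes(0.999, 30), 1))    # 43.2
# After 10.8 minutes of downtime, three quarters of the budget is left.
print(round(budget_remaining(0.999, 30, 10.8), 2))  # 0.75
```

Numbers like these give developers and product managers something tangible to negotiate over: a release that risks ten minutes of downtime consumes about a quarter of the month's budget.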

Designing Effective Workflows

How do we structure the work to support this culture?

SRE Ownership of Operations Tasks: While developers should be aware of operational impacts (latency, errors), SREs need to take ownership of managing the operational stability of services they help design and build. Think about capacity planning – it's crucial for scaling systems effectively.

On-Call Rotation Principles: We must manage incident load humanely. Rotations should account for seniority and, for distributed teams, time zones. Most importantly, they must be fair, so that no single person gets burned out on constant firefighting.
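One simple way to check a rotation for fairness is to generate it programmatically and count shifts per person. The sketch below uses plain round-robin assignment with weekly shifts; the names, start date, and weekly cadence are hypothetical, and a real scheduler would also need to handle time zones, holidays, and swaps.

```python
from collections import Counter
from datetime import date, timedelta


def build_rotation(engineers, start, weeks):
    """Assign one primary per week, cycling through the team in order."""
    schedule = []
    for week in range(weeks):
        primary = engineers[week % len(engineers)]
        schedule.append((start + timedelta(weeks=week), primary))
    return schedule


def shift_counts(schedule):
    """Count shifts per engineer so any imbalance is visible at a glance."""
    return Counter(primary for _, primary in schedule)


team = ["ana", "bo", "chen"]  # hypothetical names
schedule = build_rotation(team, date(2025, 9, 1), weeks=8)
counts = shift_counts(schedule)

# With round-robin, no one carries more than one extra shift.
print(max(counts.values()) - min(counts.values()))  # 1
```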

Fostering Continuous Improvement

This isn't a one-time migration project – it's an ongoing journey:

Regular Feedback Loops: Short cycles for planning (daily standups), continuous integration testing, and rapid deployment allow teams to quickly identify problems and make course corrections. This includes technical debt reduction loops.

Skill Development and Cross-Training: We need engineers who can understand both application code and infrastructure operations – developers with some sysadmin skills or SREs learning about the business needs driving features.

Ultimately, Pillar Two is about creating an environment where teams feel empowered to make changes confidently while maintaining high standards of operational health. It requires breaking down traditional organizational barriers and fostering a shared understanding of reliability goals.

Pillar Three: AI as an Accelerator - Leveraging Intelligent Automation for Smarter Operations

Now we reach the exciting frontier – using artificial intelligence (AI) not just as buzzword fluff, but as genuine helpers in managing complex cloud systems. This isn't about replacing human operators overnight with robot overlords; it's about augmenting their capabilities and freeing them from tedious tasks.

AI-Powered Observability

Observability is the core of understanding system health:

Anomaly Detection: Instead of just looking at static dashboards, use machine learning models to identify unusual patterns in metrics (latency spikes, error rate deviations) or logs. This helps detect subtle issues before they become major problems.

Predictive Failure Analysis: AI can analyze historical data and current trends to predict potential failures – like a system anticipating its own plumbing leak long before it happens.
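The anomaly detection idea above can be approximated, before any machine learning is involved, with a trailing-window z-score. This is a deliberately simple statistical stand-in rather than a trained model; the window size, the threshold, and the sample latencies are assumptions for illustration.

```python
from statistics import mean, stdev


def find_anomalies(values, window=5, threshold=3.0):
    """Return indices whose value deviates from the trailing window's mean
    by more than `threshold` sample standard deviations."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Flat history: any change at all is treated as anomalous.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


latency_ms = [102, 98, 101, 99, 100, 103, 97, 250, 101, 99]
print(find_anomalies(latency_ms))  # [7]
```

Note that the spike at index 7 inflates the window's spread for the next few points, which is one reason production systems tend to use more robust statistics or learned baselines.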

Intelligent Automation

Basic automation tools exist (like triggering deployments based on tests). But AI can take this further:

Automated Root Cause Analysis (RCA): Imagine your incident response tool not just identifying an outage but suggesting why it happened, correlating multiple data points automatically. This saves precious investigation time during a crisis.

Autoscaling Optimization: Use ML models to predict future load and adjust autoscaling thresholds more intelligently than simple CPU/RAM metrics allow.
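As a minimal sketch of predictive scaling, the example below fits a straight line to recent load samples, extrapolates one step ahead, and sizes the deployment for the predicted load instead of the current one. A least-squares line stands in for the ML model here; the per-replica capacity, the replica bounds, and the traffic numbers are assumptions.

```python
import math


def forecast_next(samples):
    """Fit a least-squares line to equally spaced samples and extrapolate one step."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    x_mean = (n - 1) / 2
    y_mean = sum(samples) / n
    covariance = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(samples))
    variance = sum((x - x_mean) ** 2 for x in range(n))
    slope = covariance / variance
    return y_mean + slope * (n - x_mean)


def replicas_needed(predicted_rps, rps_per_replica, min_replicas=2, max_replicas=20):
    """Size the deployment for the predicted load, clamped to safe bounds."""
    wanted = math.ceil(predicted_rps / rps_per_replica)
    return max(min_replicas, min(max_replicas, wanted))


recent_rps = [400, 450, 500, 550, 600]  # hypothetical requests per second
predicted = forecast_next(recent_rps)
print(predicted)                        # 650.0
print(replicas_needed(predicted, 100))  # 7
```

The clamp matters as much as the forecast: a bad prediction should never scale a service to zero or to an unbounded bill.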

Augmenting Human Decision-Making

The most powerful application might be cognitive assistance:

Service-Level Target Calculation Suggestions: AI can analyze SLO data with various weighting factors (severity, business impact) and suggest optimal target levels based on historical performance.

Cost Optimization Analysis: Instead of manually combing through cost reports, use AI to identify wasteful resource usage patterns or opportunities for infrastructure optimization without sacrificing reliability.
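Before reaching for AI in cost analysis, it can help to see what a plain rule-based baseline looks like, since any smarter tool should at least beat it. The sketch below flags instances with low average CPU and ranks them by cost; the `InstanceUsage` fields, the 10% threshold, and the fleet data are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class InstanceUsage:
    """Hypothetical usage record; field names are illustrative."""
    name: str
    avg_cpu_percent: float
    monthly_cost_usd: float


def underutilized(instances, cpu_threshold=10.0):
    """Return instances below the CPU threshold, most expensive first."""
    idle = [i for i in instances if i.avg_cpu_percent < cpu_threshold]
    return sorted(idle, key=lambda i: i.monthly_cost_usd, reverse=True)


fleet = [
    InstanceUsage("api-1", 62.0, 140.0),
    InstanceUsage("batch-old", 3.5, 310.0),
    InstanceUsage("staging-db", 8.0, 95.0),
]
candidates = underutilized(fleet)
print([i.name for i in candidates])                 # ['batch-old', 'staging-db']
print(sum(i.monthly_cost_usd for i in candidates))  # 405.0
```

The output is a list of candidates for a human to review, not an instruction to delete anything, which matches the oversight principle this pillar argues for.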

The key is responsible integration – view AI not as a magic bullet but as another tool requiring human oversight. It automates simple tasks and enhances complex ones, ultimately empowering teams with better information faster.

Putting it Together: A Blueprint Example Across Company Sizes (Startup to Enterprise)

Let's see how these pillars translate into practice at different stages of organizational growth:

Small/Startup Environment

Technical: Focus on foundational CI/CD – maybe Jenkins or GitLab CI for code, Terraform for basic infrastructure setups. Start with core observability tools like Prometheus/Grafana and the ELK stack.

Team: Empower a small cross-functional team to own both development and operations aspects initially. Foster an SRE culture through direct experience (blameless postmortems). On-call rotation is simple but needs consideration for founders' workload.

AI: Use it primarily for anomaly detection in logs or metrics as soon as data volume allows.

Medium-Sized Company

Technical: Invest more heavily in service meshes, robust CI/CD covering infrastructure validation, and standardized observability (consistent SLOs across services). Explore Infrastructure-as-Code best practices.

Team: Formalize roles – maybe separate platform engineering from application teams. Implement structured on-call rotations and dedicated SRE capacity. Foster a culture of shared responsibility through documentation and knowledge sharing platforms.

AI: Begin experimenting with AI-driven autoscaling and predictive failure analysis in less critical systems.

Large Enterprise

Technical: Mature multi-cloud environments require sophisticated automation – perhaps an enterprise-grade IaC platform and complex CI/CD pipelines that include security scanning (SAST/DAST) and compliance checks. Standardized observability across diverse services is key.

Team: Dedicated SRE teams with formal responsibilities and tooling for managing on-call intensity. Strong feedback loops between operations and development groups are essential. AI integration should be widespread but carefully governed.

AI: Fully leverage AI for predictive maintenance, intelligent log correlation, automated incident handling components (like initial triage), and service-level optimization across the entire portfolio.

This transformation isn't linear – you can pick and choose which aspects to develop first based on immediate needs or business priorities. The framework provides a guiding structure regardless of your current state.

Overcoming Common Hurdles in Your Cloud/SRE Transformation Journey

Let's be realistic. This path is rarely smooth. Here are some common roadblocks we encounter:

Resistance to Change and Blameless Culture

This happens everywhere – even within the ranks of seasoned IT professionals. The "but I built it" mentality needs constant challenging.

Solution: Lead by example and consistently practice blamelessness yourself. Frame SLOs as shared goals rather than targets exclusively for ops teams.

Tip: Start small with a pilot project to demonstrate benefits before tackling the entire organization.

Skills Gaps and Finding Talent

Good cloud/SRE engineers are scarce – especially those combining deep technical knowledge (DevOps, Networking) with operational thinking. And finding people comfortable working across traditional dev/ops boundaries can be tough.

Solution: Invest heavily in training and development programs within your existing teams first. Cross-train developers on basic infrastructure concepts.

Tip: Look beyond the obvious job titles – maybe a brilliant backend engineer is perfectly suited to an SRE role if they have strong monitoring and automation aptitude.

Data Silos and Poor Observability

Getting visibility into complex distributed systems requires data from various sources (logs, metrics, traces) often living in different silos. This makes root cause analysis incredibly difficult.

Solution: Implement standardized logging, metric collection, and tracing across all services from day one. Use tools that facilitate this.

Tip: Don't wait until you have a perfect monitoring tool – start with basic dashboards for critical metrics even if they aren't fully integrated yet. The goal is continuous improvement.
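Standardized logging can start very small. The sketch below uses Python's standard `logging` module to emit every record as one JSON object with the same keys, so logs from different services can be joined on a trace identifier. The key names (`service`, `trace_id`) and the `checkout` service are assumptions for this example, not an established schema.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object with a fixed set of keys."""

    def format(self, record):
        return json.dumps({
            "level": record.levelname,
            # Fields passed via `extra=` become attributes on the record.
            "service": getattr(record, "service", "unknown"),
            "trace_id": getattr(record, "trace_id", None),
            "message": record.getMessage(),
        })


def make_logger(name):
    """Build a logger that writes JSON lines to the standard error stream."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger


log = make_logger("checkout")  # hypothetical service name
log.info("payment authorized", extra={"service": "checkout", "trace_id": "abc123"})
```

Once every service emits the same shape, correlating a single request across logs becomes a filter on `trace_id` rather than an archaeology project.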

Balancing Automation with Control

While automation streamlines operations, there's always the fear of losing control or creating unexpected problems through scripts and tools.

Solution: Start with simple automations (like automated testing) before moving to more impactful ones like infrastructure provisioning via IaC. Maintain robust monitoring of the automation itself.

Tip: The principle is "Automation should not replace security review," especially initially. Require human approval for certain high-risk changes.
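The human-approval idea can be expressed as a small gate in the deployment pipeline. In the sketch below, the `PlannedChange` type, the set of actions considered high-risk, and the resource names are all hypothetical; in practice the change list would come from your IaC tool's plan output.

```python
from dataclasses import dataclass

# Assumed classification of risky actions; tune this per organization.
HIGH_RISK_ACTIONS = {"delete", "replace"}


@dataclass
class PlannedChange:
    """Hypothetical summary of one planned infrastructure change."""
    resource: str
    action: str  # e.g. "create", "update", "delete", "replace"


def requires_approval(changes):
    """Return the subset of changes a human must sign off on."""
    return [c for c in changes if c.action in HIGH_RISK_ACTIONS]


def can_auto_apply(changes, approved_by=None):
    """Allow automation to proceed only if nothing risky is unapproved."""
    risky = requires_approval(changes)
    return not risky or approved_by is not None


plan = [
    PlannedChange("cache-cluster", "update"),
    PlannedChange("orders-db", "delete"),
]
print(can_auto_apply(plan))                       # False
print(can_auto_apply(plan, approved_by="alice"))  # True
print(can_auto_apply(plan[:1]))                   # True
```

Low-risk changes flow through untouched, so the gate adds friction only where a mistake would be expensive.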

Managing On-Call Fatigue

Constantly dealing with incidents, especially during critical business hours or night shifts, can lead to burnout quickly if not managed properly.

Solution: Implement a fair rotation system, clearly define incident response levels (escalation paths), and utilize tools that help document impact accurately. Crucially, schedule regular breaks from on-call duties for team members.

Tip: Encourage teams to invest in proactive reliability work, such as fixing the causes of recurring alerts, so that fewer incidents page anyone in the first place.

Key Takeaways

Cloud-native scaling requires more than just technical plumbing – it demands a strategic balance between robust automation (CI/CD, Observability) and mature operational leadership focused on team dynamics.

Technical excellence alone is insufficient; fostering an SRE culture through shared responsibility, blameless postmortems, fair rotations, continuous improvement loops, and cross-training is non-negotiable for success at scale.

AI should be viewed as a powerful augmentation tool rather than a replacement. Its integration requires clear goals, careful implementation, and responsible governance to enhance operational efficiency without creating new problems or dependencies.

The transformation journey varies by organization size but follows the same core principles of robust technical foundations combined with healthy team dynamics and progressive AI adoption.

Be prepared for hurdles – embrace them as learning opportunities. Consistent application of these three pillars will gradually bend the curve of complexity, leading to more reliable services delivered faster and empowering your teams effectively.

So, let's build this future together. Remember that the most resilient infrastructures are those designed not just with technical rigor but with human considerations at their core.
